- Difference between APIs & web scraping

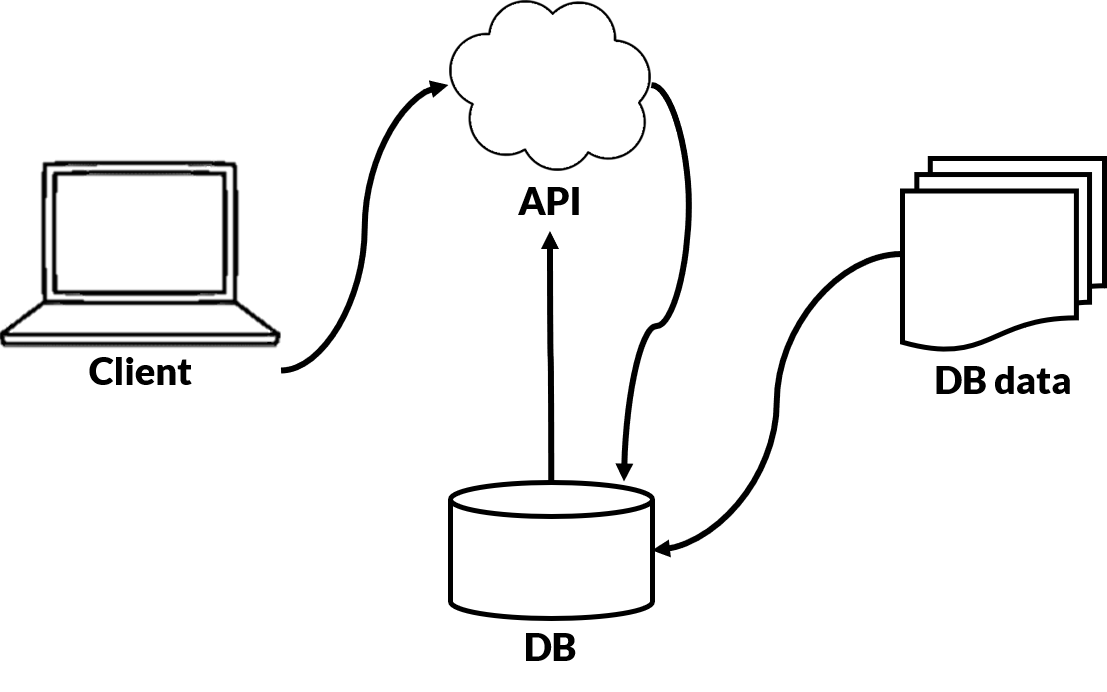

- How to extract data via an API

- How to determine if a website is using an API

- How to scrape data

Note: examples will use R

14.10.2021

Note: examples will use R

This will be provide data simply by searching the URL in your browser

But can be also easily read by a program like R or Python

url <- "https://apisidra.ibge.gov.br/values/t/6579/n3/all/v/9324?formato=json" pop <- jsonlite::fromJSON(url) # read json

sidrarlibrary(sidrar)

Which will return the exact same data with less hassle

pop_sidrar <- get_sidra(6579, variable = 9324,geo="State") #extract IBGE data

Often websites use APIs to access the data they are presenting, although this may not be obvious

To determine this you need to use the inspector panel & other developer tools

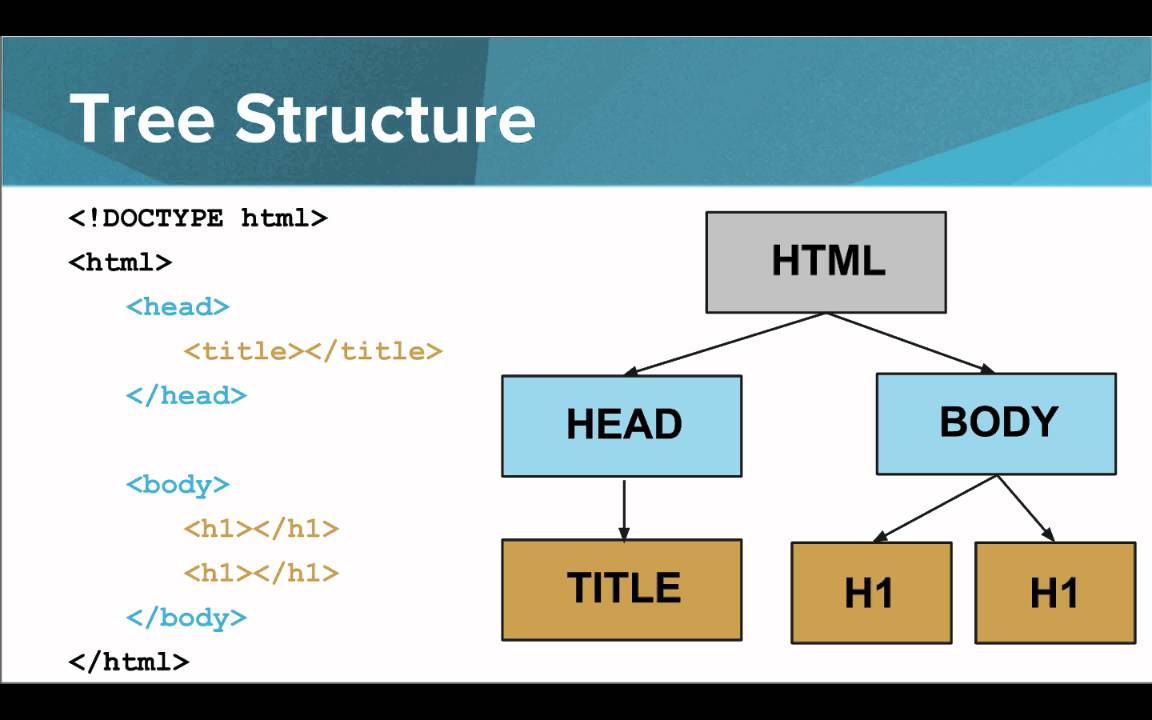

Element tab will help you find which HTML nodes containing the desired data

Network tab will help you find out whether the website is drawing its data from external sources (e.g. an API) and where to find these sources. In this case, Barry Hallebaut is accessing data from https://services1.arcgis.com

url2 <- "https://services1.arcgis.com/gASdGGCiDRjdrOYB/arcgis/rest/services/Cooperatives_and_Districts_February_2021/FeatureServer/0/query?f=geojson&where=1%3D1&returnGeometry=true&spatialRel=esriSpatialRelIntersects&outFields=*&maxRecordCountFactor=4&outSR=102100&resultOffset=0&resultRecordCount=8000&cacheHint=true&quantizationParameters=%7B%22mode%22%3A%22view%22%2C%22originPosition%22%3A%22upperLeft%22%2C%22tolerance%22%3A1.0583354500042335%2C%22extent%22%3A%7B%22xmin%22%3A-8.34314999999998%2C%22ymin%22%3A2.908540000000073%2C%22xmax%22%3A12.520860000000027%2C%22ymax%22%3A7.605490000000032%2C%22spatialReference%22%3A%7B%22wkid%22%3A4326%2C%22latestWkid%22%3A4326%7D%7D%7D" data_sf <- geojsonsf::geojson_sf(url2) #read geojson as simple feature shapefile

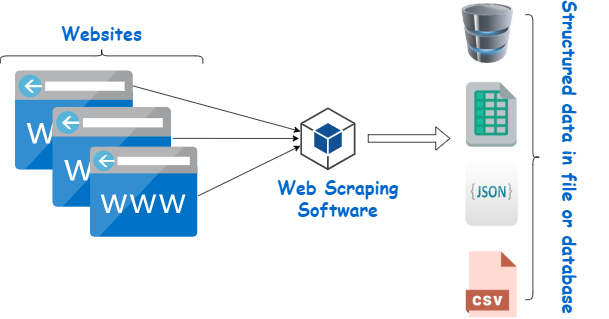

Only scrape a website if:

Scraping is done through three key steps:

<tag#id.class>

Agrolink is a Brazilian agro-business company that tracks prices of various commodities over time, incl. soy

library(rvest) # read necessary R packages library(xml2) url3 <- "https://www.agrolink.com.br/cotacoes/historico/mt/soja-em-grao-sc-60kg" html <- read_html(url3) # stage 1: get HTML node <- html_element(html,".table-striped.agk-cont-tb1") # stage 2: extract elements dat_scrape <- html_table(node,dec=",") # stage 3: read as data table

url4 <- "https://www.cargill.com/sustainability/palm-oil/managing-grievances"

html2 <- read_html(url4) #stage 1

# scrape company names (dropdown headers)

headers <- html_elements(html2,".showhide-header") # stage 2

comps <- html_text2(headers, preserve_nbsp=TRUE) # stage 3

#scrape grievance issue per company & per grievance entity

grievance <- html2 %>%

html_elements(".mod-content") %>% #extract node for each company

html_text(trim=T) %>% #extract text

as.list() %>% #convert to list

map(str_split,pattern="Issue Under Review: ",simplify=T) %>% #split at issue

map(function(x){x[x!=""]}) %>% #drop empty strings

map(function(x){map(x,str_split,pattern="\n\n",n=3,simplify=T)}) #split at \n\n 3x

#attach company names to grievance data

names(grievance) <- comps[comps!=""]

## levelName ## 1 Root ## 2 ¦--Aceydesa ## 3 ¦ °--1 ## 4 ¦--Cargill Tropical Palm ## 5 ¦ ¦--1 ## 6 ¦ ¦--2 ## 7 ¦ °--3 ## 8 ¦--Felda Global Ventures ## 9 ¦ ¦--1 ## 10 ¦ ¦--2 ## 11 ¦ ¦--3 ## 12 ¦ °--4 ## 13 °--Golden Agri-Resources ## 14 °--1

# convert from nested list to longform dataframe with reshape2::melt

dat_scrape2 <- grievance %>%

reshape2::melt(level=2) %>%

pivot_wider(id_cols=c(L2,L3), #reshape to wide

names_from = Var2,

values_from = value) %>%

separate(`3`,into = c("Other_info","Action_taken"),

sep = "Actions Cargill Has Taken to Date") %>%

separate(`2`,into=c("Entity","Date","Status"),

sep = "\n|-|–") %>%

rename(c("Supplier"=L2,"Subsupplier"=L3,"Grievance"=`1`))

purrr::map in R)lists in R)purrr::possibly)Sys.sleep)polite package (makes it easier to identify yourself & your intentions while scraping)

note: code used to create this presentation can be found on my GitHub

rvest - main package for scraping websites in R (uses xml2 & httr)xml2 - parses XML & HTML(Languages used for encoding data on the web). XML is an alternativehttr - makes http requests (e.g. to an API) easierRSelenium - advanced scraping tool that allows you to create virtual browser (e.g. to get around logins)data.tree - package for working with/visualising tree structured data (like XML/HTML)polite - scraping sites politelyjsonlite - read json data into R easilygeojsonio - read geojson data into R easilytidyverse - group of packages to make coding easier, includes purrr::map() as well as %>% and tidyr::pivot_wider used here